Welcome back to The AGI Observer. Following our Introduction and Part 1 on Scaling Deep Learning, Part 2 examines the Neuro-Symbolic pathway: what it is in plain language, why it matters, who is pushing it forward, where the bottlenecks are, and how we will know it is working. As before, we end with a sample educational virtual portfolio to show how this research maps to real companies and infrastructure. Educational only; not financial advice.

Executive summary

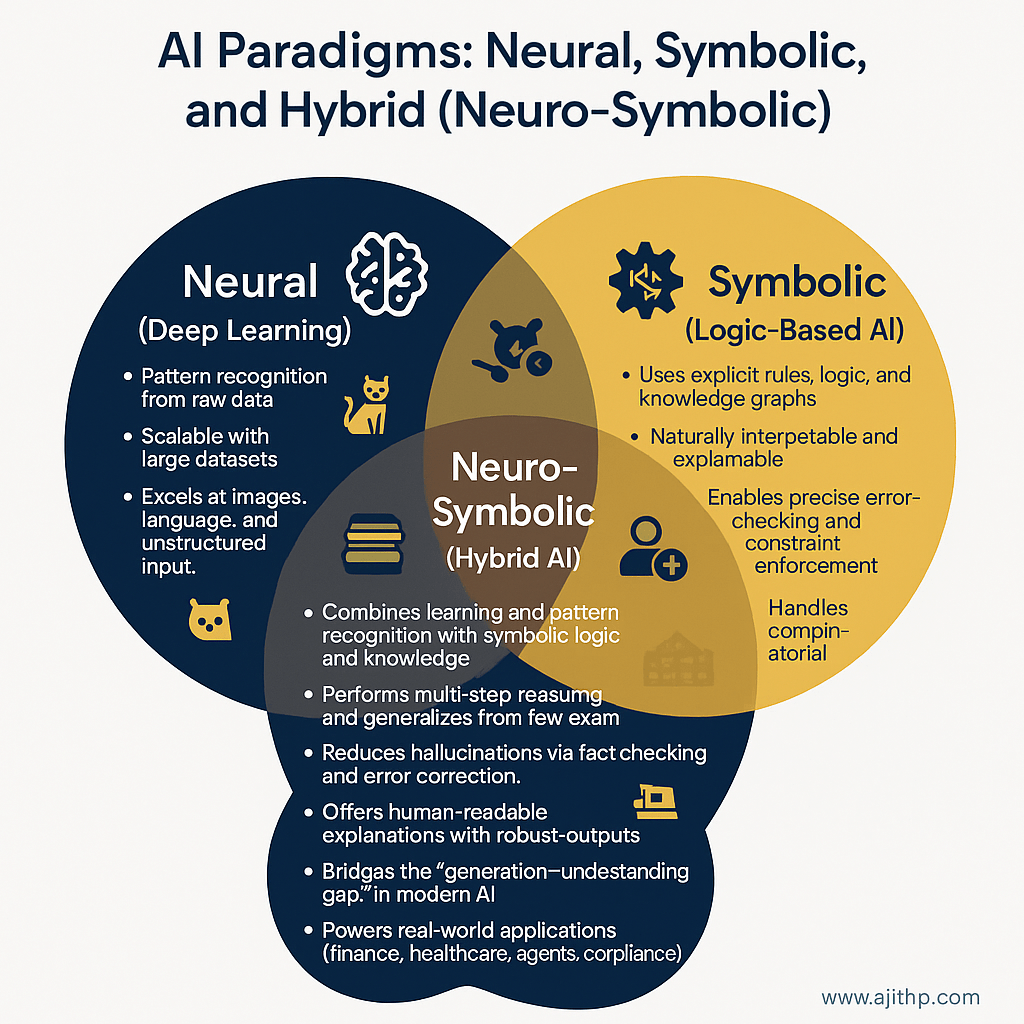

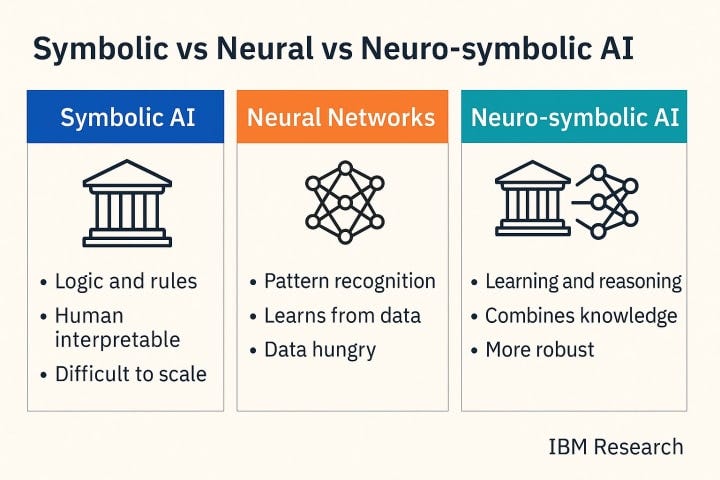

- Thesis. Neuro-symbolic AI blends two strengths in one system: neural networks that learn patterns from data and symbolic components that use rules, logic, and knowledge to check facts and plan steps.
- Why it matters. This approach targets the weaknesses of pure pattern learning, such as brittle logic and hallucinations, and is attractive for domains that need reliability, traceability, and compliance.
- Near term. Expect copilots that can show their working, check answers against rule sets, and follow plans across tools.
- Risk. The integration is hard – connecting fast neural learners with precise symbolic reasoners can add latency, engineering complexity, and maintenance overhead.
- What to watch. Systems that explain their steps, pass harder reasoning tests, stay fresh with live knowledge, and deliver enterprise-grade reliability.

1) What “neuro-symbolic” means in practice

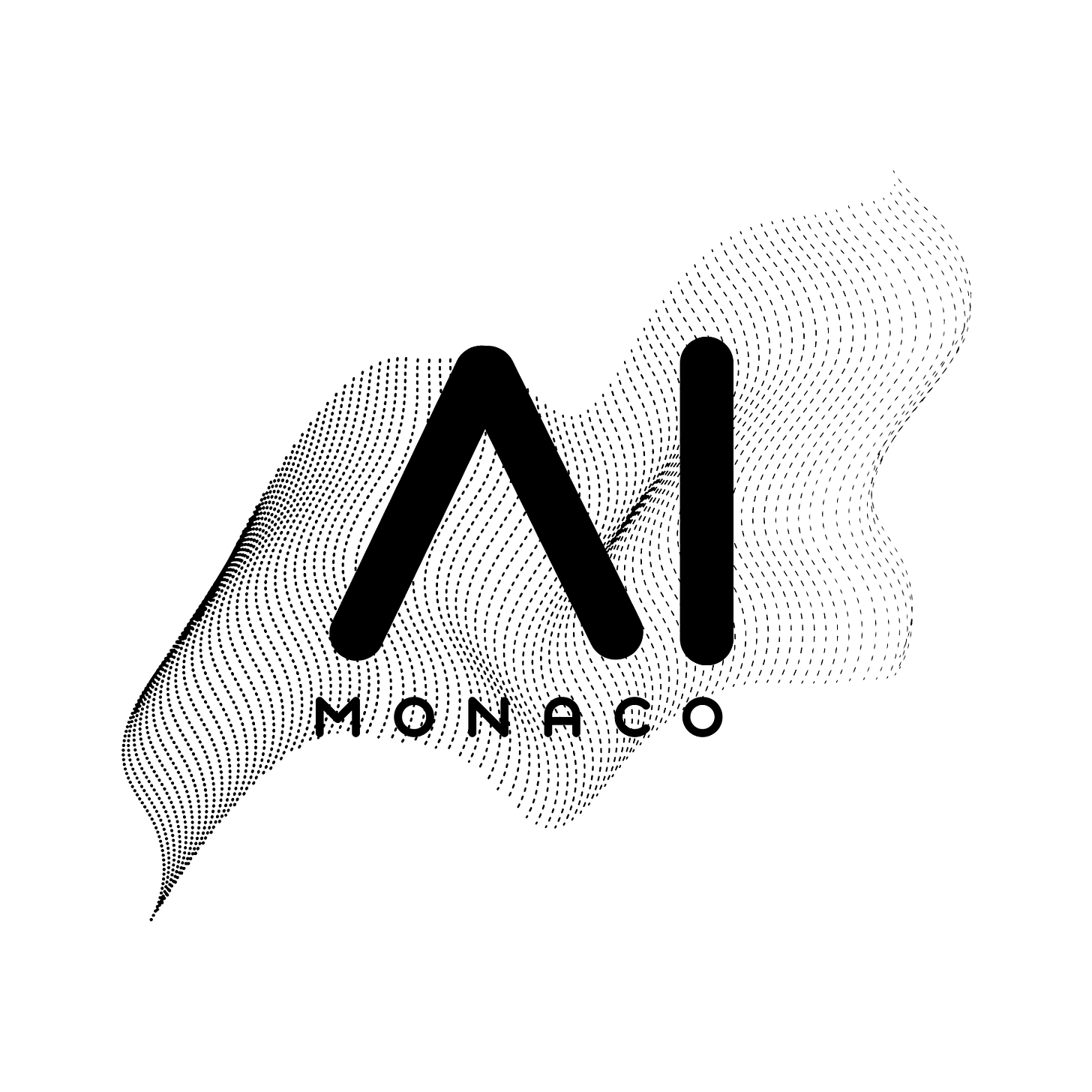

-

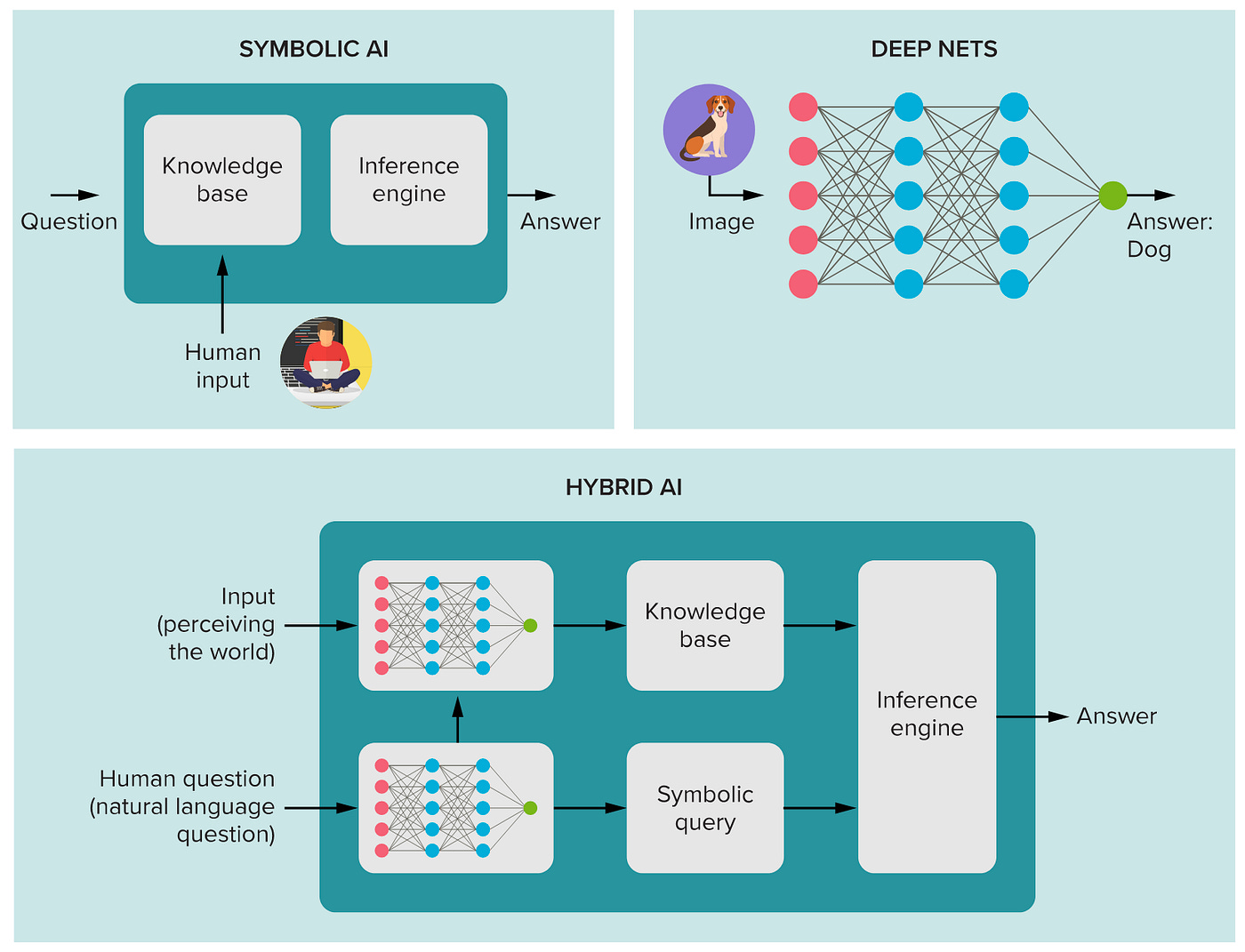

Neural side – learn from examples. Find patterns in language, images, code, and behavior.

-

Symbolic side – reason with knowledge. Use rules, constraints, and structured facts to verify, plan, and reconcile conflicts.

-

The loop. The neural model drafts an answer, the symbolic layer checks it against rules or a knowledge base, flags issues, and guides a corrected answer.
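To make the loop concrete, here is a minimal Python sketch. The neural model is replaced by a hypothetical stub (`neural_draft`) that first returns a plausible but wrong claim, and the knowledge base is a hypothetical dictionary of facts; all names are illustrative, not a real library's API. The symbolic check flags the conflict, and the flag is fed back to guide the corrected draft.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Claim:
    subject: str
    relation: str
    value: str


# Hypothetical curated facts, keyed by (subject, relation).
KNOWLEDGE_BASE = {
    ("Berlin", "capital_of"): "Germany",
    ("Paris", "capital_of"): "France",
}


def neural_draft(hints: dict) -> Claim:
    """Stand-in for the neural model: a fluent but wrong claim on the first pass,
    and it adopts any correction hints it is given on later passes."""
    key = ("Berlin", "capital_of")
    return Claim(key[0], key[1], hints.get(key, "France"))  # "France" is a deliberate error


def symbolic_check(claim: Claim) -> Optional[str]:
    """Symbolic layer: return the knowledge-base value if it contradicts the claim.
    Claims the knowledge base has no entry for pass unchecked in this sketch."""
    expected = KNOWLEDGE_BASE.get((claim.subject, claim.relation))
    if expected is not None and expected != claim.value:
        return expected
    return None


def draft_check_correct(max_rounds: int = 3) -> tuple[Claim, list[str]]:
    """Draft, check, and re-draft with feedback until the check passes."""
    hints: dict = {}
    trace: list[str] = []
    for _ in range(max_rounds):
        claim = neural_draft(hints)
        expected = symbolic_check(claim)
        if expected is None:
            trace.append(f"accepted: {claim.subject} {claim.relation} {claim.value}")
            return claim, trace
        trace.append(f"flagged: {claim.value!r} conflicts with knowledge base value {expected!r}")
        hints[(claim.subject, claim.relation)] = expected  # feedback guides the next draft
    raise RuntimeError("no verified answer within the round limit")


if __name__ == "__main__":
    answer, trace = draft_check_correct()
    for step in trace:
        print(step)
```

Running it prints one flagged draft followed by the accepted, corrected claim. Note the design choice in `symbolic_check`: facts the knowledge base does not cover pass through unchecked, which is the coverage gap discussed in section 5.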

2) Why this pathway matters

- Fewer hallucinations. Answers can be checked against explicit rules and sources.
- Better reasoning. Multi-step tasks benefit from plans and constraints, not only pattern matching.
- Auditability. The system can show intermediate steps and cite where facts came from.
- Fit for regulated use. Finance, health, law, and government need explanations and control.

3) How it works under the hood

- Structured knowledge. Curated facts stored as entities and relationships, so the system can look up exact information.
- Rules and constraints. If-then logic, calculators, and validators whose checks must pass before a result is accepted.
- Program calls. Instead of guessing a number, the model calls a function to compute it, then reports the result along with the steps.
- Planners. A simple plan is created first, then executed and corrected if checks fail.
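The four ingredients can be strung together in a small Python sketch, assuming a toy procurement task: a dictionary of (entity, relationship) facts stands in for structured knowledge, `check` holds the rules, `compute_total` is the program call, and `plan_and_execute` runs a fixed plan (look up, compute, validate) and corrects it when a check fails. Every fact, rule, and function name here is hypothetical.

```python
from decimal import Decimal

# Structured knowledge: hypothetical facts stored as (entity, relationship) -> value.
FACTS = {
    ("laptop", "unit_price"): Decimal("1200.00"),
    ("laptop", "approved_vendor"): "Acme Corp",
    ("team", "hardware_budget"): Decimal("5000.00"),
}


def lookup(entity: str, relation: str):
    """Exact lookup; a missing fact raises KeyError rather than being guessed."""
    return FACTS[(entity, relation)]


def compute_total(unit_price: Decimal, quantity: int) -> Decimal:
    """Program call: the number is computed, not generated."""
    return unit_price * quantity


def check(order: dict) -> list[str]:
    """Rules and constraints: names of violated rules (empty list means accepted)."""
    violations = []
    if order["total"] > lookup("team", "hardware_budget"):
        violations.append("over_budget")
    if order["vendor"] != lookup(order["item"], "approved_vendor"):
        violations.append("unapproved_vendor")
    return violations


def plan_and_execute(item: str, vendor: str, quantity: int):
    """Planner: run the fixed plan, and repair it by shrinking the order
    while the budget rule is the only failure."""
    trace = []
    while quantity > 0:
        total = compute_total(lookup(item, "unit_price"), quantity)
        order = {"item": item, "vendor": vendor, "quantity": quantity, "total": total}
        violations = check(order)
        trace.append(f"quantity={quantity} total={total} violations={violations}")
        if not violations:
            return order, trace
        if violations != ["over_budget"]:
            raise ValueError(f"cannot repair automatically: {violations}")
        quantity -= 1  # correction step, then re-run the checks
    raise ValueError("no quantity fits the budget")


if __name__ == "__main__":
    order, trace = plan_and_execute("laptop", "Acme Corp", quantity=6)
    print("\n".join(trace))
    print("accepted:", order)
```

Starting from six laptops, the plan is corrected twice (6 and 5 units exceed the budget) and accepted at 4 units for 4800.00. A vendor violation cannot be fixed by shrinking the order, so the sketch raises instead of guessing; in practice that case would go to a person.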

4) Where we are today

- Copilots with guardrails. Draft then verify, with visible citations.
- Tool-using agents. Models call search, code, spreadsheets, and databases, while checkers enforce constraints.
- Early wins. Document review, policy compliance, data extraction with validation, analytics that must reconcile to ledger totals, and code assistants that run tests before proposing patches.
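A reconciliation check of the kind listed under early wins can be very small. The Python sketch below assumes extracted line items arrive as dictionaries with string amounts (field names are hypothetical); it validates each item, then accepts the extraction only if the amounts sum exactly to the ledger total. `Decimal` keeps the currency arithmetic exact rather than floating-point.

```python
from decimal import Decimal, InvalidOperation

REQUIRED_FIELDS = ("account", "amount")  # hypothetical extraction schema


def validate_item(item: dict) -> list[str]:
    """Field-level checks on one extracted line item."""
    problems = [f"missing field {name!r}" for name in REQUIRED_FIELDS if name not in item]
    if "amount" in item:
        try:
            Decimal(item["amount"])
        except (InvalidOperation, TypeError):
            problems.append(f"unparseable amount {item['amount']!r}")
    return problems


def reconcile(items: list[dict], ledger_total: str) -> dict:
    """Accept extracted figures only if every item is valid and they sum
    exactly to the ledger total; otherwise report why."""
    problems = [p for item in items for p in validate_item(item)]
    if problems:
        return {"accepted": False, "problems": problems}
    extracted_sum = sum((Decimal(item["amount"]) for item in items), Decimal("0"))
    difference = extracted_sum - Decimal(ledger_total)
    return {"accepted": difference == 0, "difference": difference}


if __name__ == "__main__":
    extracted = [
        {"account": "Travel", "amount": "1250.40"},
        {"account": "Meals", "amount": "310.15"},
        {"account": "Software", "amount": "899.00"},
    ]
    print(reconcile(extracted, ledger_total="2459.55"))  # reconciles
    print(reconcile(extracted, ledger_total="2469.55"))  # off by 10.00: flag for review
```

The difference is returned rather than silently absorbed, so a reviewer can see by how much an extraction misses the ledger.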

5) Bottlenecks and proposed fixes

- Integration cost. Bridging neural and symbolic components can slow iteration – start with narrow, high-value rule sets.
- Freshness. Knowledge bases go stale – attach live connectors and schedule updates.
- Coverage gaps. Rules miss edge cases – log failures and promote them into new rules.
- Latency. Extra checks add time – cache results and parallelize independent checks.
- Evaluation. Standard benchmarks rarely test explainability – include process-based tests that score plans, sources, and final answers.
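The latency fix in the list above (cache results, parallelize independent checks) can be sketched with Python's standard library: `functools.lru_cache` memoizes each check and `concurrent.futures.ThreadPoolExecutor` runs them concurrently. The two checks, their toy rules, and the 0.2-second delay are hypothetical stand-ins for real calls to a document store or policy engine.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from functools import lru_cache


@lru_cache(maxsize=1024)
def check_source(citation: str) -> bool:
    """Hypothetical slow check against a document store. Cached, so a citation
    that recurs across answers is verified only once."""
    time.sleep(0.2)  # simulated I/O latency
    return citation.startswith("doc:")  # toy rule: only internal documents count


@lru_cache(maxsize=1024)
def check_policy(text: str) -> bool:
    """Hypothetical policy rule: answers must not promise guaranteed returns."""
    time.sleep(0.2)  # simulated I/O latency
    return "guaranteed" not in text.lower()


def run_checks(text: str, citations: tuple[str, ...]) -> dict[str, bool]:
    """The checks do not depend on each other, so they are submitted together
    and run concurrently; the wait is roughly the slowest check, not the sum."""
    jobs = {"policy": (check_policy, text)}
    jobs.update({f"source:{c}": (check_source, c) for c in citations})
    with ThreadPoolExecutor(max_workers=8) as pool:
        futures = {name: pool.submit(fn, arg) for name, (fn, arg) in jobs.items()}
        return {name: future.result() for name, future in futures.items()}


if __name__ == "__main__":
    answer = "Revenue grew 4% year over year."
    cites = ("doc:annual-report", "web:unverified-post")
    for attempt in ("cold", "cached"):
        start = time.perf_counter()
        results = run_checks(answer, cites)
        print(attempt, results, f"{time.perf_counter() - start:.2f}s")
```

On the first call the three checks overlap; on the second every result comes from the cache. Caching is only safe while a check's answer depends solely on its inputs, so a check against a live knowledge base would need an expiry policy, which ties back to the freshness point.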

6) Timelines with explicit uncertainty

- 12-24 months. Strong adoption for verifiable copilots in legal, finance, procurement, and compliance.
- 2-4 years. Widely used planning-and-checking agents for operations and analytics, with clear audit trails.
- Beyond 4 years. Hybrid systems converge with scaled models, pairing broad understanding with dependable logic and memory.

7) Risks and how to manage them

- False sense of safety. A green check can hide gaps – keep humans in the loop for high-impact actions.
- Rule brittleness. Overly rigid rules break with new data – budget for upkeep and monitoring.
- Privacy and access control. Knowledge bases may contain sensitive information – enforce permissions and logging.
- Cost creep. More components can raise bills – measure value per task, not only raw usage.
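Two of these mitigations, keeping people in the loop for high-impact actions and enforcing permissions with logging, fit in a few lines of Python. This is a minimal sketch: the access-control list, role names, and the 10,000 approval threshold are hypothetical. The point is that a passing check narrows human review but does not replace it.

```python
import logging
from dataclasses import dataclass

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(name)s: %(message)s")
audit_log = logging.getLogger("audit")

# Hypothetical access-control list: knowledge-base collection -> roles allowed to read it.
ACL = {
    "hr_records": {"hr"},
    "public_policies": {"hr", "analyst"},
}

# Hypothetical threshold at or above which an action always goes to a person.
HIGH_IMPACT_AMOUNT = 10_000


def can_read(role: str, collection: str) -> bool:
    """Enforce permissions before retrieval, and log every decision for audit."""
    allowed = role in ACL.get(collection, set())
    audit_log.info("read collection=%s role=%s allowed=%s", collection, role, allowed)
    return allowed


@dataclass
class ProposedAction:
    description: str
    amount: float


def route(action: ProposedAction, checks_passed: bool) -> str:
    """A passing automated check is necessary but not sufficient:
    high-impact actions are still queued for human approval."""
    if not checks_passed:
        decision = "rejected"
    elif action.amount >= HIGH_IMPACT_AMOUNT:
        decision = "needs_human_approval"
    else:
        decision = "auto_approved"
    audit_log.info("action=%r amount=%s decision=%s", action.description, action.amount, decision)
    return decision


if __name__ == "__main__":
    can_read("analyst", "hr_records")
    route(ProposedAction("refund duplicate invoice", 180.0), checks_passed=True)
    route(ProposedAction("release vendor payment", 25_000.0), checks_passed=True)
```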

8) Where it helps first

- Finance. Policy checks, disclosure control, reconciliations, risk narratives with sources.
- Law. Clause extraction with rule validation, explainable contract comparisons.
- Healthcare. Coding and claims assistance with guidelines, safety summaries with cited literature.
- Public sector. Policy Q&A backed by official registers and explicit decision rules.
- Engineering. Spec compliance, unit checks, and calculations inside design assistants.

9) Sample educational virtual portfolio – Neuro-Symbolic stack

Education only – not financial advice. Equal weight within buckets.

Knowledge and graph platforms (25%)

- Microsoft (MSFT) – enterprise graph and knowledge integration inside productivity and cloud.
- Oracle (ORCL) – data, rules, and knowledge features for regulated workloads.
- SAP (SAP) – business rules and knowledge in enterprise processes.
- MongoDB (MDB) – flexible data structures used for knowledge applications.
- Elastic (ESTC) – search and retrieval across documents with structured fields.

Reasoning, rules, and verification (20%)

- ServiceNow (NOW) – workflow engines and policy automation.
- Palantir (PLTR) – decision platforms with model tracing and controls.
- Intapp (INTA) – risk and compliance logic in professional services.
- Alteryx (AYX) – analytic pipelines with checks and governance.

Developers, tooling, and data quality (20%)

- Datadog (DDOG) – observability for complex AI pipelines.
- GitLab (GTLB) – code and policy enforcement in AI-assisted development.
- Snowflake (SNOW) – high-quality data sharing with governance.
- Databricks – partner exposure via MSFT/ORCL ecosystems.

Cloud and integration backbone (20%)

- Amazon (AMZN) – cloud services for knowledge, rules, and tool calling.
- Alphabet (GOOGL) – search, retrieval, and knowledge integrations.
- Microsoft (MSFT) – again as the common deployment plane.

Application beachheads (15%)

- Thomson Reuters (TRI) – legal and tax copilots with source citations.
- RELX (RELX) – risk and scientific content integrations.
- Intuit (INTU) – financial workflows with rules and checks.
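Equal weighting within buckets has one consequence worth spelling out: Microsoft appears in two buckets, so its shares add up. The Python sketch below computes per-ticker weights with exact fractions. It assumes the Databricks line describes indirect exposure rather than a separate holding, since it has no ticker in the list, so the Developers bucket is split across its three listed tickers.

```python
from collections import defaultdict
from fractions import Fraction

# Buckets as listed above: (bucket weight in %, tickers). Assumption: the Databricks
# line is indirect exposure, not a separate holding, so it receives no weight.
BUCKETS = {
    "Knowledge and graph platforms": (25, ["MSFT", "ORCL", "SAP", "MDB", "ESTC"]),
    "Reasoning, rules, and verification": (20, ["NOW", "PLTR", "INTA", "AYX"]),
    "Developers, tooling, and data quality": (20, ["DDOG", "GTLB", "SNOW"]),
    "Cloud and integration backbone": (20, ["AMZN", "GOOGL", "MSFT"]),
    "Application beachheads": (15, ["TRI", "RELX", "INTU"]),
}


def ticker_weights(buckets: dict) -> dict[str, Fraction]:
    """Equal weight within each bucket; a ticker listed in several buckets
    accumulates its share from each."""
    weights: dict[str, Fraction] = defaultdict(Fraction)
    for bucket_weight, tickers in buckets.values():
        for ticker in tickers:
            weights[ticker] += Fraction(bucket_weight, len(tickers))
    return dict(weights)


if __name__ == "__main__":
    weights = ticker_weights(BUCKETS)
    assert sum(weights.values()) == 100  # buckets add up to the whole portfolio
    for ticker, weight in sorted(weights.items(), key=lambda kv: -kv[1]):
        print(f"{ticker:<6}{float(weight):6.2f}%")
```

Under that assumption MSFT totals about 11.67% (5% plus 6.67%), the largest single position; DDOG, GTLB, SNOW, AMZN, and GOOGL come to about 6.67% each, and the remaining names to 5% each.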

Closing thought

Scaling gives breadth. Neuro-symbolic gives structure. The path to dependable intelligence likely needs both. The nearer we get to high-stakes decisions, the more valuable explicit knowledge, rules, and explanations become.

Prepared by AI Monaco

AI Monaco is a leading-edge research firm that specializes in utilizing AI-powered analytics and data-driven insights to provide clients with exceptional market intelligence. We focus on offering deep dives into key sectors like AI technologies, data analytics and innovative tech industries.